I am a final-year PhD student at MIT with Antonio Torralba and Phillip Isola. I work on learned representations, decision-making, and reasoning.

Research. I am interested in the two intertwined paths towards generalist agents: (1) agents trained on multiple tasks and (2) agents that can adaptively solve new tasks. My research learns structured representations that aggregate and select information about the world from various data sources, improving the efficiency and generality of decision-making agents.

Outside research, I worked on building PyTorch (versions 0.2 to 1.2), developing the first offering of the MIT Deep Learning course, and writing open-source ML projects.

Email: tongzhou _AT_ mit _DOT_ edu

Selected Publications (full list)

| Structured representation for better agents | What is machine learning really learning? |


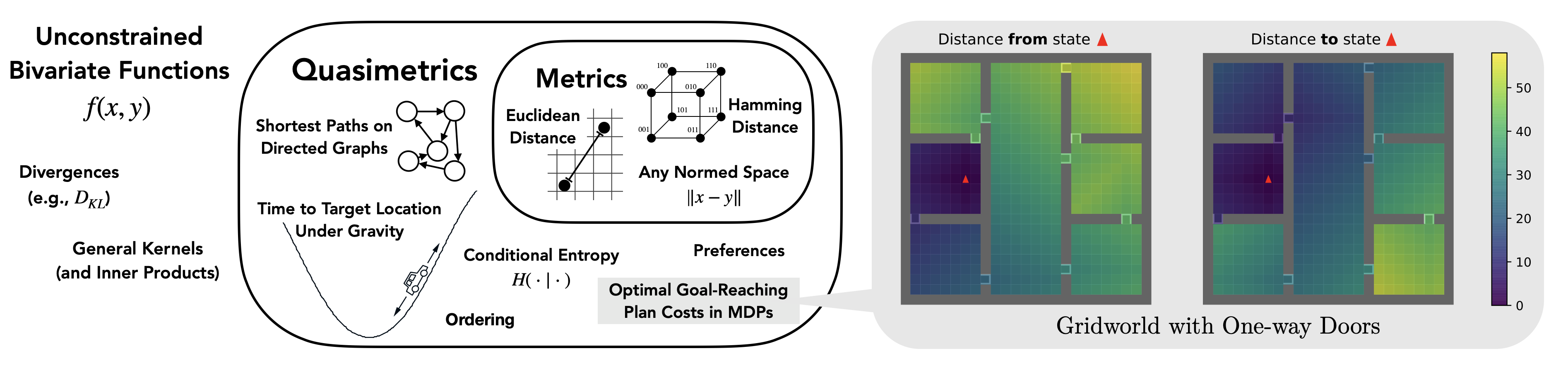

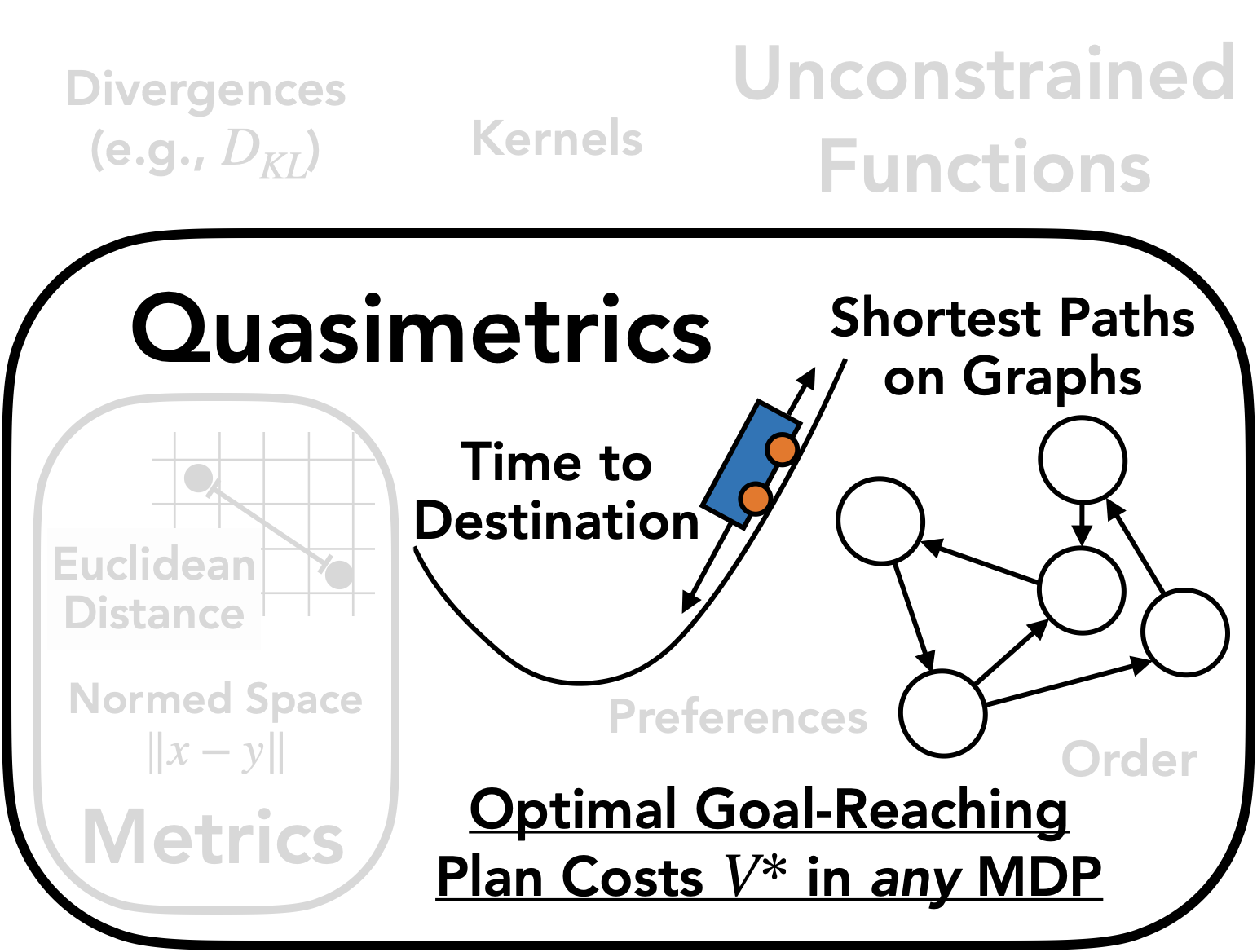

Optimal Goal-Reaching Reinforcement Learning via Quasimetric Learning [ICML 2023] [Project Page] [arXiv] [Code] Tongzhou Wang, Antonio Torralba, Phillip Isola, Amy Zhang


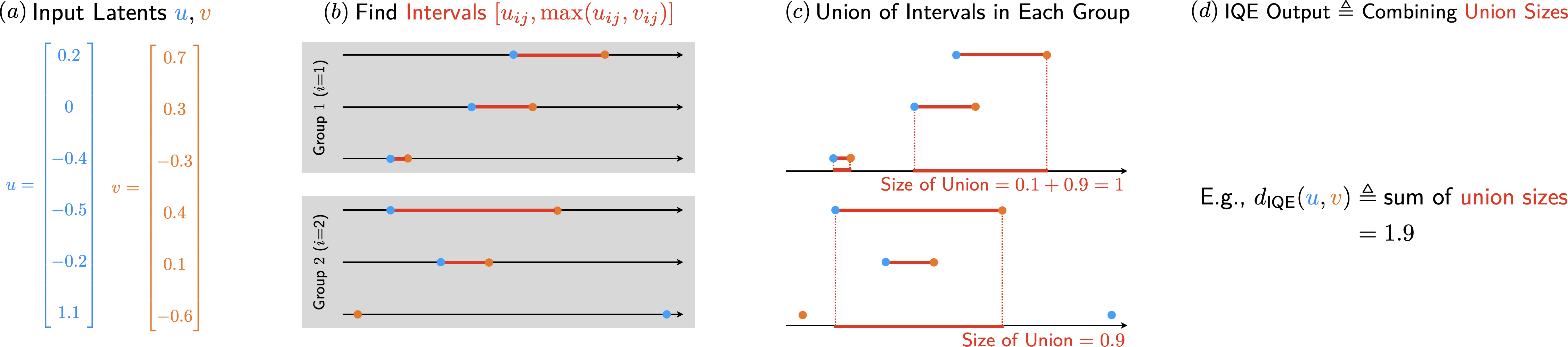

Improved Representation of Asymmetrical Distances with Interval Quasimetric Embeddings [NeurIPS 2022 NeurReps Workshop] [Project Page] [arXiv] [PyTorch Package for Quasimetric Learning] Tongzhou Wang, Phillip Isola


Denoised MDPs: Learning World Models Better Than The World Itself [ICML 2022] [Project Page] [arXiv] [code] Tongzhou Wang, Simon S. Du, Antonio Torralba, Phillip Isola, Amy Zhang, Yuandong Tian


On the Learning and Learnability of Quasimetrics [ICLR 2022] [Project Page] [arXiv] [OpenReview] [code] Tongzhou Wang, Phillip Isola


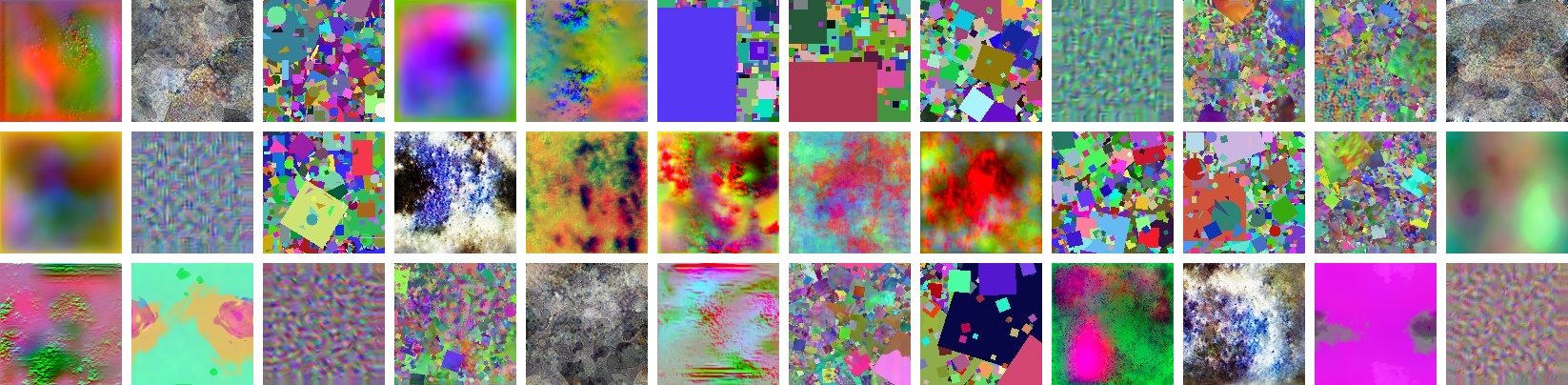

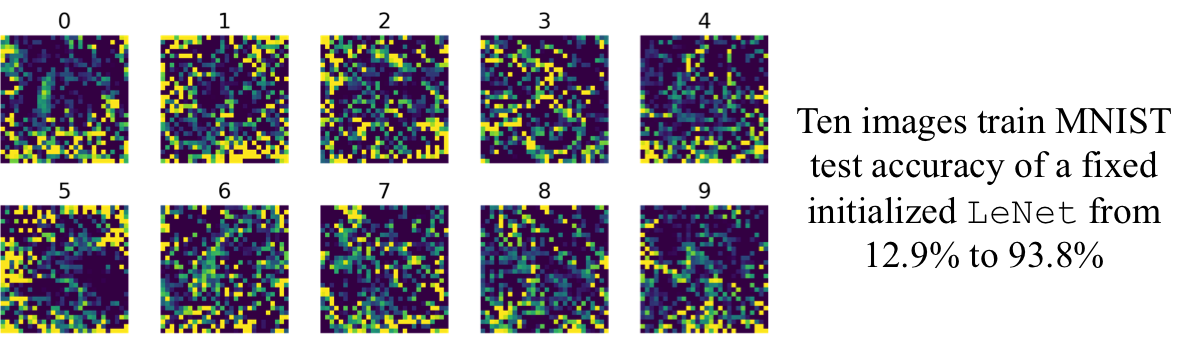

Learning to See by Looking at Noise [NeurIPS 2021] [Project Page] [arXiv] [code & datasets] Manel Baradad*, Jonas Wulff*, Tongzhou Wang, Phillip Isola, Antonio Torralba


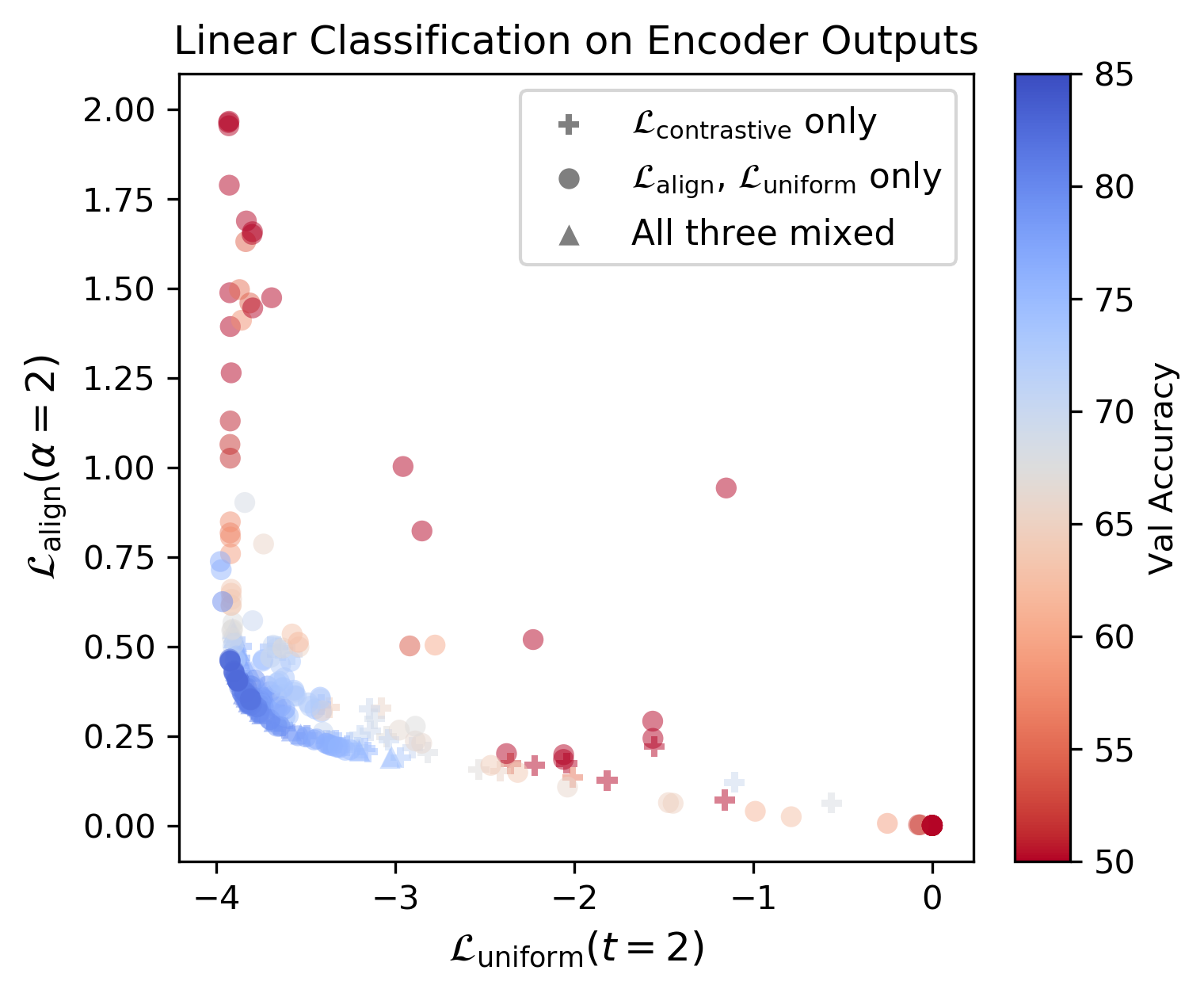

Understanding Contrastive Representation Learning through Alignment and Uniformity on the Hypersphere [ICML 2020] [Project Page] [arXiv] [code] Tongzhou Wang, Phillip Isola

```python
# bsz : batch size (number of positive pairs)
# d   : latent dim
# x   : Tensor, shape=[bsz, d]
#       latents for one side of positive pairs
# y   : Tensor, shape=[bsz, d]
#       latents for the other side of positive pairs

def align_loss(x, y, alpha=2):
    return (x - y).norm(p=2, dim=1).pow(alpha).mean()
```

PyTorch implementation of the alignment and uniformity losses
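The caption names a uniformity loss as well, but only the alignment term appears on this page. A minimal sketch of the uniformity term follows, assuming the definition from the paper: the logarithm of the average Gaussian potential over all pairs of latents in the batch. The default `t=2` and the random inputs in the usage lines are illustrative assumptions, not values taken from this page.

```python
import torch
import torch.nn.functional as F


def uniform_loss(x, t=2):
    # x : Tensor, shape=[bsz, d], latents assumed to be L2-normalized
    # torch.pdist returns the Euclidean distances between all pairs of rows
    sq_dists = torch.pdist(x, p=2).pow(2)
    # log of the mean Gaussian potential exp(-t * ||x_i - x_j||^2)
    return sq_dists.mul(-t).exp().mean().log()


# Usage sketch: random latents projected onto the unit hypersphere
torch.manual_seed(0)
x = F.normalize(torch.randn(8, 16), dim=1)
loss = uniform_loss(x)
```

The loss is never positive, and it decreases as the latents spread out more evenly over the hypersphere.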


Dataset Distillation [Project Page] [arXiv] [code] [DD Papers] Tongzhou Wang, Jun-Yan Zhu, Antonio Torralba, Alexei A. Efros


Quasimetric Geometry
